
Reinforced robotic foundation model learning

VLA-RL aims to enable pretrained vision-language-action (VLA) models to perform complex robotic manipulation tasks effectively through online reinforcement learning. Our research addresses three key limitations of current VLAs: (1) offline pretraining often covers only a narrow state-action distribution, so we explore how to improve generalization to unseen environments; (2) sparse reward signals hinder effective policy learning, which motivates us to design vision-language dense reward models; (3) large-scale online fine-tuning is inefficient, which leads us to investigate scalable frameworks that improve sample efficiency. As a result, VLA-RL outperforms strong baselines by 4.5% on 40 LIBERO benchmark tasks, achieving state-of-the-art performance in skills like pouring, pipetting, stirring, and precise tool handoff.
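
To make the sparse-versus-dense reward distinction in point (2) concrete, here is a minimal sketch. It is not the VLA-RL reward model: the `progress` scores stand in for the output of a hypothetical vision-language reward model that rates each observation in [0, 1], and the function name and `weight` parameter are assumptions chosen for illustration.

```python
from typing import List, Sequence


def shaped_rewards(
    progress: Sequence[float],
    success: bool,
    weight: float = 0.5,
) -> List[float]:
    """Turn per-observation progress scores into per-step rewards.

    progress: hypothetical scores in [0, 1], one per observation, so an
              episode with T steps has T + 1 scores.
    success:  sparse task outcome, only known at the end of the episode.
    weight:   assumed scale of the dense term relative to the sparse one.
    """
    if len(progress) < 2:
        raise ValueError("need at least two progress scores")
    # Dense term: reward each step by how much the progress score increased.
    rewards = [weight * (nxt - cur) for cur, nxt in zip(progress, progress[1:])]
    # Sparse term: the usual success bonus, paid only on the final step.
    rewards[-1] += 1.0 if success else 0.0
    return rewards


if __name__ == "__main__":
    # Two steps, steady progress, successful episode.
    print(shaped_rewards([0.0, 0.5, 1.0], success=True))
```

With only the sparse term, every step except the last would receive zero reward; the dense term gives the policy a learning signal at intermediate steps as well.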


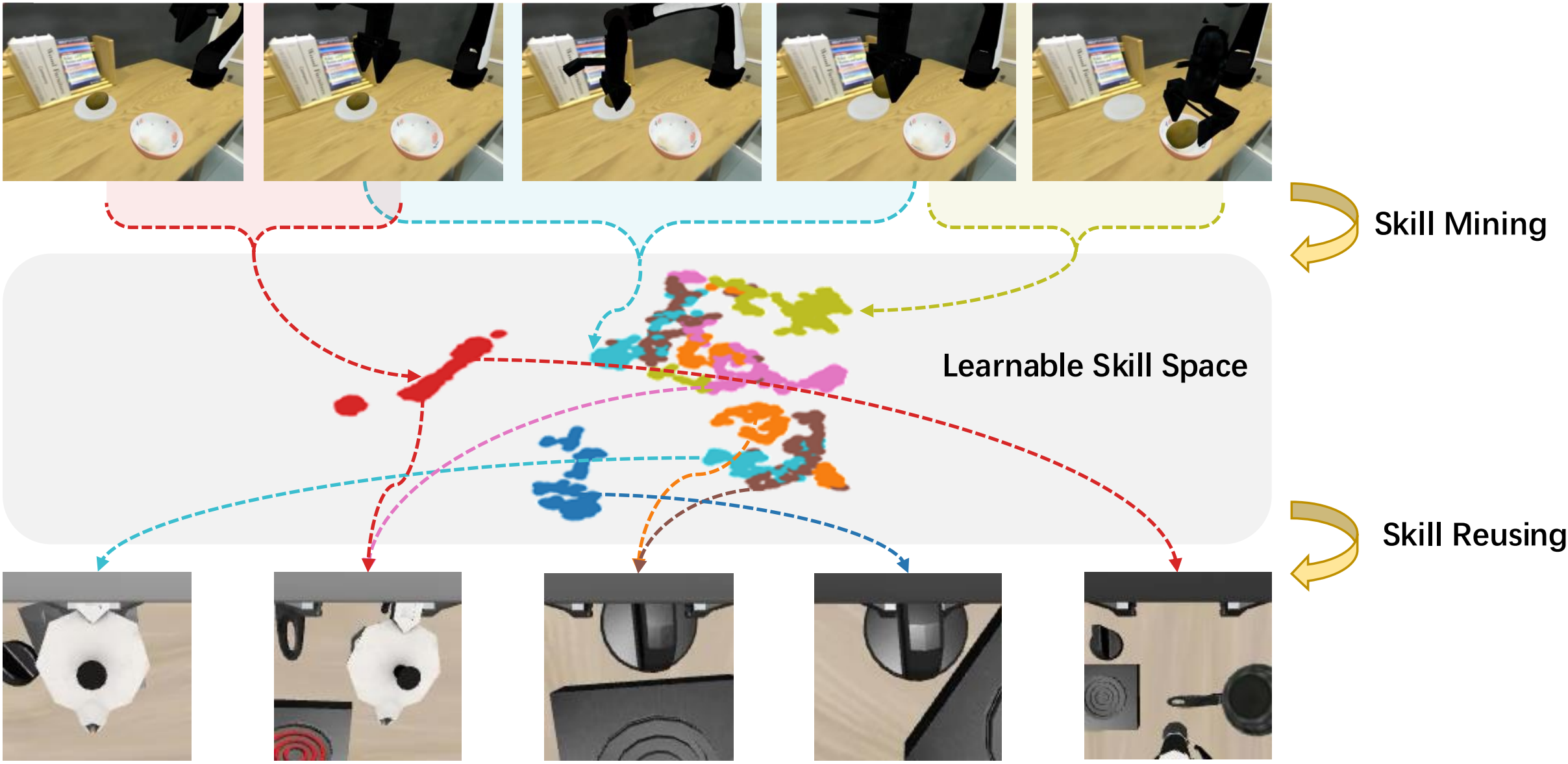

In embodied tasks, both environments and objectives are often highly complex. Nevertheless, long-horizon tasks can typically be decomposed into sequences of reusable skills. Our research focuses on building a skill-based vision-language-action (VLA) framework and investigates (1) how to automatically discover and represent meaningful skill spaces from data, and (2) how to explicitly or implicitly select appropriate skills for a given context while promoting skill reuse across tasks. Ultimately, we aim to improve the generalization and efficiency of VLA models in diverse and dynamic scenarios.
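
One way to picture the explicit and implicit skill selection in point (2) is sketched below. This is an illustrative assumption, not the framework's actual mechanism: skills are represented as hypothetical embedding vectors, the context is scored against them with a dot product, and a softmax yields soft weights (implicit selection) whose argmax gives a single skill (explicit selection).

```python
import math
from typing import Dict, Sequence


def skill_weights(
    context: Sequence[float],
    skills: Dict[str, Sequence[float]],
    temperature: float = 1.0,
) -> Dict[str, float]:
    """Softmax over dot-product similarity between context and each skill."""
    scores = {
        name: sum(c * e for c, e in zip(context, emb)) / temperature
        for name, emb in skills.items()
    }
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {name: math.exp(s - top) for name, s in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


def select_skill(
    context: Sequence[float],
    skills: Dict[str, Sequence[float]],
    temperature: float = 1.0,
) -> str:
    """Explicit selection: pick the skill with the largest soft weight."""
    weights = skill_weights(context, skills, temperature)
    return max(weights, key=weights.get)


if __name__ == "__main__":
    # Hypothetical two-skill library with 2-D embeddings.
    library = {"reach": [1.0, 0.0], "grasp": [0.0, 1.0]}
    print(skill_weights([0.9, 0.1], library))
    print(select_skill([0.9, 0.1], library))
```

The same skill embeddings can be shared by every task that queries the library, which is the sense in which the skills are reusable.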


Mobile Manipulation

Mobile manipulation is a fundamental problem for embodied intelligent robots because it requires navigation and manipulation to work together. Existing approaches struggle with task generalization and whole-body control. This project equips any off-the-shelf manipulation model with mobility through policy transfer, yielding a highly generalizable mobile manipulation framework that operates in a zero-shot manner. Further, we propose a hierarchical visuomotor policy for mobile manipulation that produces flexible and feasible whole-body control trajectories.


Bimanual Manipulation

Bimanual manipulation aims to perform general tasks conditioned on language instructions, enabling applications from household service to industrial assembly. However, collecting bimanual data is costly due to the high-dimensional action space, and the inherent complexity of dual-arm systems makes coordinated control particularly challenging. To overcome these issues, we propose a plug-and-play framework that leverages pretrained unimanual skills by dynamically scheduling their representations and aligning visual observations through soft workspace masking. Our method achieves state-of-the-art performance on complex bimanual tasks such as pouring and sweeping, demonstrating strong generalization and coordination with minimal supervision.
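
The idea of a soft mask, as opposed to a hard crop, can be sketched as follows. This is a hand-written stand-in under stated assumptions, not the framework's method, where the mask is presumably learned: each observed point is assigned a weight between 0 and 1 from its lateral position `x`, and the hypothetical `boundary` and `sharpness` parameters control where and how quickly the weight shifts from the left arm's view to the right arm's view.

```python
import math
from typing import List, Sequence, Tuple


def _sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def soft_mask(
    xs: Sequence[float], boundary: float = 0.0, sharpness: float = 0.1
) -> List[float]:
    """Per-point weight for the right arm: near 1 right of `boundary`, near 0 left."""
    return [_sigmoid((x - boundary) / sharpness) for x in xs]


def split_observation(
    xs: Sequence[float],
    features: Sequence[float],
    boundary: float = 0.0,
    sharpness: float = 0.1,
) -> Tuple[List[float], List[float]]:
    """Return (left_view, right_view): features reweighted for each arm."""
    right_w = soft_mask(xs, boundary, sharpness)
    left_view = [(1.0 - w) * f for w, f in zip(right_w, features)]
    right_view = [w * f for w, f in zip(right_w, features)]
    return left_view, right_view


if __name__ == "__main__":
    left, right = split_observation([-1.0, 0.0, 1.0], [2.0, 2.0, 2.0])
    print(left, right)
```

Because the weights vary smoothly, points near the shared region in the middle remain partly visible to both arms, which a hard left/right crop would not allow.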


A 3D world model empowers an agent to interactively explore imagined environments. By incorporating 3D information, the world model can more accurately reflect the physical properties of the real world. Furthermore, when conditioned on task-specific textual instructions, the 3D world model is capable of predicting corresponding dense rewards, thereby enabling more effective instruction-following and goal-directed behavior.